Fang Li

University of Texas at Dallas

Kimi K2.5: Visual Agentic Intelligence

Feb 02, 2026

Abstract:We introduce Kimi K2.5, an open-source multimodal agentic model designed to advance general agentic intelligence. K2.5 emphasizes the joint optimization of text and vision so that the two modalities enhance each other. This includes a series of techniques such as joint text-vision pre-training, zero-vision SFT, and joint text-vision reinforcement learning. Building on this multimodal foundation, K2.5 introduces Agent Swarm, a self-directed parallel agent orchestration framework that dynamically decomposes complex tasks into heterogeneous sub-problems and executes them concurrently. Extensive evaluations show that Kimi K2.5 achieves state-of-the-art results across various domains including coding, vision, reasoning, and agentic tasks. Agent Swarm also reduces latency by up to $4.5\times$ over single-agent baselines. We release the post-trained Kimi K2.5 model checkpoint to facilitate future research and real-world applications of agentic intelligence.

Structural Compositional Function Networks: Interpretable Functional Compositions for Tabular Discovery

Jan 27, 2026

Abstract:Despite the ubiquity of tabular data in high-stakes domains, traditional deep learning architectures often struggle to match the performance of gradient-boosted decision trees while maintaining scientific interpretability. Standard neural networks typically treat features as independent entities, failing to exploit the inherent manifold structural dependencies that define tabular distributions. We propose Structural Compositional Function Networks (StructuralCFN), a novel architecture that imposes a Relation-Aware Inductive Bias via a differentiable structural prior. StructuralCFN explicitly models each feature as a mathematical composition of its counterparts through Differentiable Adaptive Gating, which automatically discovers the optimal activation physics (e.g., attention-style filtering vs. inhibitory polarity) for each relationship. Our framework enables Structured Knowledge Integration, allowing domain-specific relational priors to be injected directly into the architecture to guide discovery. We evaluate StructuralCFN across a rigorous 10-fold cross-validation suite on 18 benchmarks, demonstrating statistically significant improvements (p < 0.05) on scientific and clinical datasets (e.g., Blood Transfusion, Ozone, WDBC). Furthermore, StructuralCFN provides Intrinsic Symbolic Interpretability: it recovers the governing "laws" of the data manifold as human-readable mathematical expressions while maintaining a compact parameter footprint (300--2,500 parameters) that is over an order of magnitude (10x--20x) smaller than standard deep baselines.

VILTA: A VLM-in-the-Loop Adversary for Enhancing Driving Policy Robustness

Jan 19, 2026

Abstract:The safe deployment of autonomous driving (AD) systems is fundamentally hindered by the long-tail problem, where rare yet critical driving scenarios are severely underrepresented in real-world data. Existing solutions, including safety-critical scenario generation and closed-loop learning, often rely on rule-based heuristics, resampling methods, and generative models learned from offline datasets, limiting their ability to produce diverse and novel challenges. While recent works leverage Vision Language Models (VLMs) to produce scene descriptions that guide a separate, downstream model in generating hazardous trajectories for agents, such a two-stage framework constrains the generative potential of VLMs, as the diversity of the final trajectories is ultimately limited by the generalization ceiling of the downstream algorithm. To overcome these limitations, we introduce VILTA (VLM-In-the-Loop Trajectory Adversary), a novel framework that integrates a VLM into the closed-loop training of AD agents. Unlike prior works, VILTA actively participates in the training loop by comprehending the dynamic driving environment and strategically generating challenging scenarios through direct, fine-grained editing of surrounding agents' future trajectories. This direct-editing approach fully leverages the VLM's powerful generalization capabilities to create a diverse curriculum of plausible yet challenging scenarios that extend beyond the scope of traditional methods. We demonstrate that our approach substantially enhances the safety and robustness of the resulting AD policy, particularly in its ability to navigate critical long-tail events.

Motion Blur Robust Wheat Pest Damage Detection with Dynamic Fuzzy Feature Fusion

Jan 06, 2026

Abstract:Motion blur caused by camera shake produces ghosting artifacts that substantially degrade edge-side object detection. Existing approaches either suppress blur as noise and lose discriminative structure, or apply full image restoration that increases latency and limits deployment on resource-constrained devices. We propose DFRCP, a Dynamic Fuzzy Robust Convolutional Pyramid, as a plug-in upgrade to YOLOv11 for blur-robust detection. DFRCP enhances the YOLOv11 feature pyramid by combining large-scale and medium-scale features while preserving native representations, and by introducing Dynamic Robust Switch units that adaptively inject fuzzy features to strengthen global perception under jitter. Fuzzy features are synthesized by rotating and nonlinearly interpolating multiscale features, then merged through a transparency convolution that learns a content-adaptive trade-off between original and fuzzy cues. We further develop a CUDA parallel rotation and interpolation kernel that avoids boundary overflow and delivers a speedup of more than 400 times, making the design practical for edge deployment. We train with paired supervision on a private wheat pest damage dataset of about 3,500 images, augmented threefold using two blur regimes: uniform image-wide motion blur and bounding-box-confined rotational blur. On blurred test sets, YOLOv11 with DFRCP achieves about 10.4 percent higher accuracy than the YOLOv11 baseline with only a modest training time overhead, reducing the need for manual filtering after data collection.

DVGT: Driving Visual Geometry Transformer

Dec 18, 2025

Abstract:Perceiving and reconstructing 3D scene geometry from visual inputs is crucial for autonomous driving. However, a driving-targeted dense geometry perception model that can adapt to different scenarios and camera configurations is still lacking. To bridge this gap, we propose a Driving Visual Geometry Transformer (DVGT), which reconstructs a global dense 3D point map from a sequence of unposed multi-view visual inputs. We first extract visual features for each image using a DINO backbone, and employ alternating intra-view local attention, cross-view spatial attention, and cross-frame temporal attention to infer geometric relations across images. We then use multiple heads to decode a global point map in the ego coordinate of the first frame and the ego poses for each frame. Unlike conventional methods that rely on precise camera parameters, DVGT is free of explicit 3D geometric priors, enabling flexible processing of arbitrary camera configurations. DVGT directly predicts metric-scaled geometry from image sequences, eliminating the need for post-alignment with external sensors. Trained on a large mixture of driving datasets including nuScenes, OpenScene, Waymo, KITTI, and DDAD, DVGT significantly outperforms existing models on various scenarios. Code is available at https://github.com/wzzheng/DVGT.

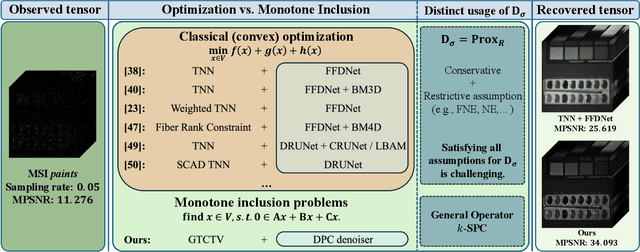

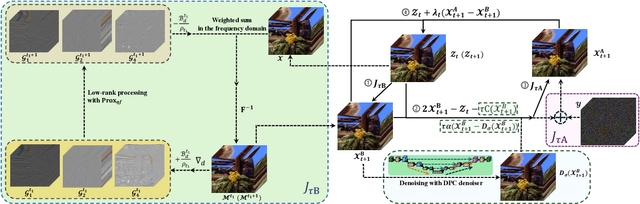

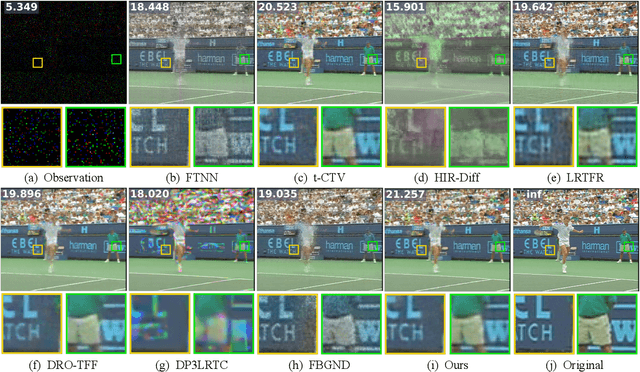

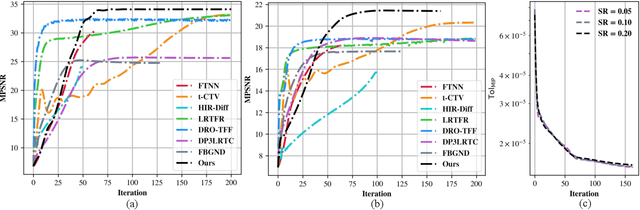

Tensor Completion via Monotone Inclusion: Generalized Low-Rank Priors Meet Deep Denoisers

Oct 14, 2025

Abstract:Missing entries in multi-dimensional data pose significant challenges for downstream analysis across diverse real-world applications. These data are naturally modeled as tensors, and recent completion methods integrating global low-rank priors with plug-and-play denoisers have demonstrated strong empirical performance. However, these approaches often rely on empirical convergence alone or unrealistic assumptions, such as deep denoisers acting as proximal operators of implicit regularizers, which generally does not hold. To address these limitations, we propose a novel tensor completion framework grounded in the monotone inclusion paradigm, which unifies generalized low-rank priors with deep pseudo-contractive denoisers and extends beyond traditional convex optimization. Building on the Davis-Yin splitting scheme, we develop the GTCTV-DPC algorithm and rigorously establish its global convergence. Extensive experiments demonstrate that GTCTV-DPC consistently outperforms existing methods in both quantitative metrics and visual quality, particularly at low sampling rates.

RGB-Only Supervised Camera Parameter Optimization in Dynamic Scenes

Sep 18, 2025

Abstract:Although COLMAP has long remained the predominant method for camera parameter optimization in static scenes, it is constrained by its lengthy runtime and reliance on ground truth (GT) motion masks for application to dynamic scenes. Many efforts have attempted to improve it by incorporating more priors as supervision, such as GT focal length, motion masks, 3D point clouds, camera poses, and metric depth, which, however, are typically unavailable in casually captured RGB videos. In this paper, we propose a novel method for more accurate and efficient camera parameter optimization in dynamic scenes solely supervised by a single RGB video. Our method consists of three key components: (1) Patch-wise Tracking Filters, to establish robust and maximally sparse hinge-like relations across the RGB video. (2) Outlier-aware Joint Optimization, for efficient camera parameter optimization by adaptive down-weighting of moving outliers, without reliance on motion priors. (3) A Two-stage Optimization Strategy, to enhance stability and optimization speed by a trade-off between the Softplus limits and convex minima in losses. We visually and numerically evaluate our camera estimates. To further validate accuracy, we feed the camera estimates into a 4D reconstruction method and assess the resulting 3D scenes and the rendered 2D RGB and depth maps. We perform experiments on 4 real-world datasets (NeRF-DS, DAVIS, iPhone, and TUM-dynamics) and 1 synthetic dataset (MPI-Sintel), demonstrating that our method estimates camera parameters more efficiently and accurately with a single RGB video as the only supervision.

Compositional Function Networks: A High-Performance Alternative to Deep Neural Networks with Built-in Interpretability

Jul 28, 2025

Abstract:Deep Neural Networks (DNNs) deliver impressive performance but their black-box nature limits deployment in high-stakes domains requiring transparency. We introduce Compositional Function Networks (CFNs), a novel framework that builds inherently interpretable models by composing elementary mathematical functions with clear semantics. Unlike existing interpretable approaches that are limited to simple additive structures, CFNs support diverse compositional patterns -- sequential, parallel, and conditional -- enabling complex feature interactions while maintaining transparency. A key innovation is that CFNs are fully differentiable, allowing efficient training through standard gradient descent. We demonstrate CFNs' versatility across multiple domains, from symbolic regression to image classification with deep hierarchical networks. Our empirical evaluation shows CFNs achieve competitive performance against black-box models (96.24% accuracy on CIFAR-10) while outperforming state-of-the-art interpretable models like Explainable Boosting Machines. By combining the hierarchical expressiveness and efficient training of deep learning with the intrinsic interpretability of well-defined mathematical functions, CFNs offer a powerful framework for applications where both performance and accountability are paramount.

Kimi K2: Open Agentic Intelligence

Jul 28, 2025

Abstract:We introduce Kimi K2, a Mixture-of-Experts (MoE) large language model with 32 billion activated parameters and 1 trillion total parameters. We propose the MuonClip optimizer, which improves upon Muon with a novel QK-clip technique to address training instability while enjoying the advanced token efficiency of Muon. Based on MuonClip, K2 was pre-trained on 15.5 trillion tokens with zero loss spike. K2 then undergoes a multi-stage post-training process, highlighted by a large-scale agentic data synthesis pipeline and a joint reinforcement learning (RL) stage, where the model improves its capabilities through interactions with real and synthetic environments. Kimi K2 achieves state-of-the-art performance among open-source non-thinking models, with strengths in agentic capabilities. Notably, K2 obtains 66.1 on Tau2-Bench, 76.5 on ACEBench (En), 65.8 on SWE-Bench Verified, and 47.3 on SWE-Bench Multilingual -- surpassing most open and closed-source baselines in non-thinking settings. It also exhibits strong capabilities in coding, mathematics, and reasoning tasks, with a score of 53.7 on LiveCodeBench v6, 49.5 on AIME 2025, 75.1 on GPQA-Diamond, and 27.1 on OJBench, all without extended thinking. These results position Kimi K2 as one of the most capable open-source large language models to date, particularly in software engineering and agentic tasks. We release our base and post-trained model checkpoints to facilitate future research and applications of agentic intelligence.

ReCogDrive: A Reinforced Cognitive Framework for End-to-End Autonomous Driving

Jun 09, 2025

Abstract:Although end-to-end autonomous driving has made remarkable progress, its performance degrades significantly in rare and long-tail scenarios. Recent approaches attempt to address this challenge by leveraging the rich world knowledge of Vision-Language Models (VLMs), but these methods suffer from several limitations: (1) a significant domain gap between the pre-training data of VLMs and real-world driving data, (2) a dimensionality mismatch between the discrete language space and the continuous action space, and (3) the tendency of imitation learning to capture the average behavior present in the dataset, which may be suboptimal or even dangerous. In this paper, we propose ReCogDrive, an autonomous driving system that integrates VLMs with a diffusion planner and adopts a three-stage training paradigm. In the first stage, we use a large-scale driving question-answering dataset to train the VLMs, mitigating the domain discrepancy between generic content and real-world driving scenarios. In the second stage, we employ a diffusion-based planner to perform imitation learning, mapping representations from the latent language space to continuous driving actions. Finally, we fine-tune the diffusion planner using reinforcement learning with the NAVSIM non-reactive simulator, enabling the model to generate safer, more human-like driving trajectories. We evaluate our approach on the planning-oriented NAVSIM benchmark, achieving a PDMS of 89.6 and setting a new state-of-the-art that surpasses the previous vision-only SOTA by 5.6 PDMS.
